From feedback to action: Revision of the Evaluation and Assessment framework

After nearly a decade, our Evaluation and Assessment (E&A) framework is getting a thoughtful refresh. The goal is simple: clearer signals, less noise, and faster paths from feedback to action. We’ve removed questions already captured at registration, aligned the remaining items with accreditation formats, and focused every item on evidence you can use to improve teaching and curriculum.

What’s new?

- Confidence before and after

Confidence ratings are now paired with key learning objectives in both the pre- and post-course surveys. This captures baseline confidence and change after the course, giving a clear read on learning impact and readiness to apply in the clinical setting.

- Frequency of use (practice context)

A concise frequency question asks how often participants use the targeted skills or concepts in practice. This anchors results in real-world context and helps interpret confidence and outcome data.

- Open text with a purpose

Short, targeted prompts now ask about enablers, barriers, and concrete improvement ideas, turning comments into next steps you can act on.

- Barrier rating

In the follow-up survey (commitment-to-change), participants rate the severity of barriers to applying their learning. These ratings surface both systemic and context-specific obstacles that impede implementation. Findings trigger refinements to content and delivery.

- Aligned and lean

A streamlined, standardized question set mirrors continuing medical education wording where appropriate, reduces survey time, and makes results comparable across events.

Why this matters

- Tangible outcomes

Tracking confidence pre- and post-course establishes a baseline, highlights where reinforcement is needed, and pinpoints confidence gains after the event.

- Richer insight

Open text reveals the why behind scores, surfacing practical ideas to refine programs.

- Real-world relevance

Frequency data grounds results in everyday practice, clarifying where improvements will have the greatest impact.

- Better experience

A consistent, shorter survey journey respects participants’ time and improves data quality.

- Stronger reports

Clean, comparable data powers clearer, more actionable reports that guide teaching decisions, curriculum updates, and program planning.

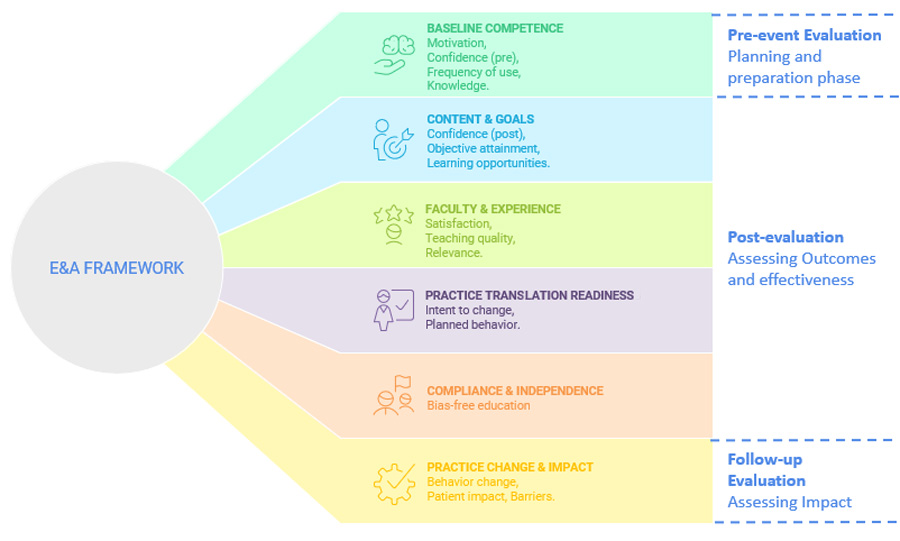

How it works (at a glance)

- Pre-course

Baseline motivation, confidence, frequency of use, and, for curriculum-based programs, prior knowledge. These inputs help calibrate emphasis and methods.

- Post-course

Achievement of objectives, post-course confidence, and concise open text on what to keep or change.

- Follow-up (3 months after the event)

Commitment to change, what was applied, and barrier rating.

Quality and testing

The revision followed a multi-stage process: stakeholder needs assessment, external expert review, internal subject-matter expert (SME) workshops, cognitive interviews with surgeons, and a live pilot. Findings showed balanced item performance and positive clarity ratings. Items were refined and carried forward, and no systemic issues were identified.

What’s next?

The revised E&A framework launches at Davos Courses 2025 (pre- and post-course). Expansion to all events will proceed once the data collection platform is operational.