How pre-event assessment and post-event evaluation help deliver the best-quality education

The AO has a system in place to collect and analyze data on all of its educational activities to determine the effectiveness of educational events and improve the quality of education globally.

Pre-Event Participant Data Report

This report is shared before each educational event and helps chairpersons and faculty adapt course content to best meet participants' needs. It is also an educational tool that encourages participants to engage with the content before the course and prepares them to learn. For curriculum-based courses, multiple-choice questions have been created for each competency, and the results are shown in this report.

The Pre-Event Participant Data Report includes participant profiles, a gap/motivation analysis, and, when available, the multiple-choice question results.

Chairpersons are encouraged to share this information with faculty members before the course and during the pre-course meeting to help them with their planning.

To boost data collection, the AO Education Institute recommends that chairpersons prepare a brief e-mail explaining the importance of the pre-event assessment to course participants before the process begins (40 days before an event). Course organizers are happy to send this e-mail to the participants on the chairpersons' behalf.

What actions can faculty take based on the results of the pre-event assessment report?

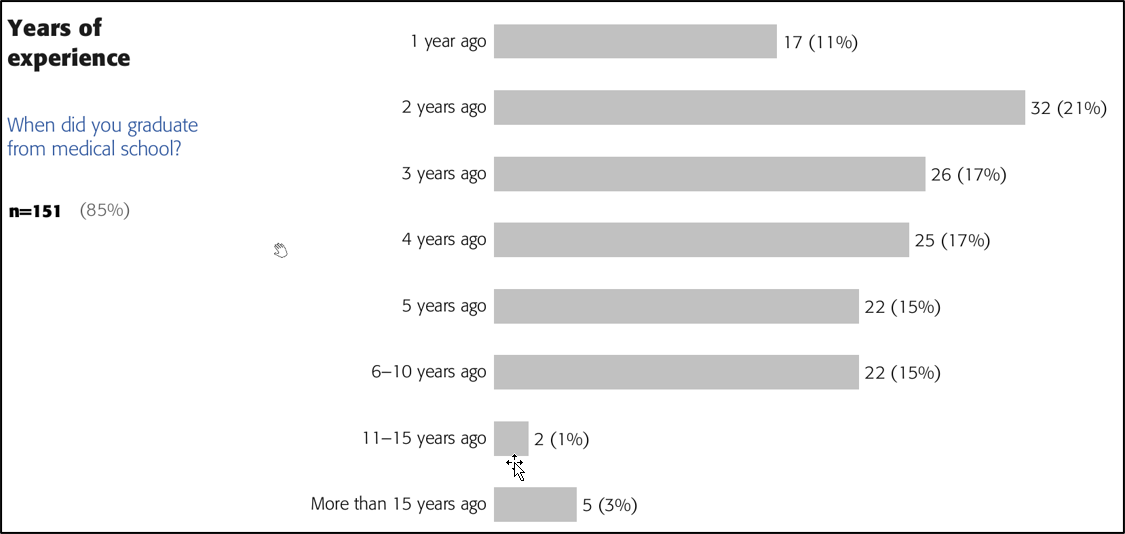

Example 1: participants with widely differing experience levels

Interpretation: Around 80% of the participants are residents, while the remainder are more experienced, including five highly experienced surgeons. Since the course content is tailored to junior surgeons, the more experienced surgeons could, for example, lose motivation or dominate the case discussions.

How can faculty involve the experienced surgeons without letting them dominate?

First, identify the experienced surgeons and observe their behavior. If they seem bored, consider engaging them by occasionally asking more challenging questions or by raising the complexity of one case in the case discussions. Asking the experienced participants to explain certain points to their colleagues may also work.

If, instead, the experienced surgeons are dominating the discussions, try to bring the discussion back to the basics and involve the more junior participants. Once the basics are covered, faculty can return to the more experienced surgeons. The difficult part is keeping a balance and meeting the needs of all participants. Think carefully about grouping participants according to their level of experience or expertise.

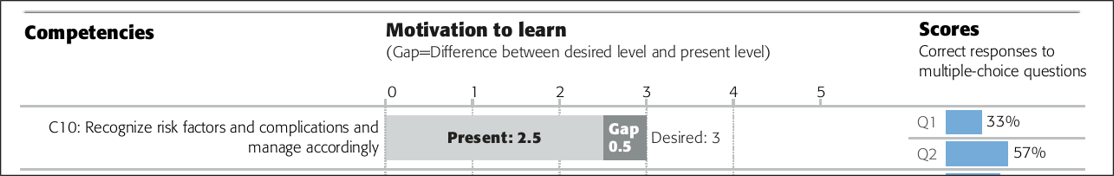

Example 2: small gap and low level of desired ability

Interpretation: Participants do not perceive this topic as relevant or interesting enough (the desired level is only 3 and the gap is small), so their motivation to learn is low. The multiple-choice question scores suggest that they do not know much about this topic.

How can faculty increase participants' motivation to learn?

One option is to start by presenting a case with a poor outcome, in which the risk factors and complications were not recognized and the case was therefore mismanaged. This can be done at the beginning of a lecture or in small group discussions. It may also be necessary to reinforce this topic throughout the course (lectures, small group discussions, and practical exercises).

Example 3: small gap and high level of present ability

Interpretation: Participants perceive this topic as relevant and believe they already know a lot about it (their present level of ability is 4). The multiple-choice question results suggest that this self-assessment is accurate. The gap is small, and therefore the motivation to learn more about this topic is relatively low.

How can faculty increase participants' motivation to learn?

Try using more complex cases in small group discussions or at the beginning of the lecture on the topic.

Example 4: low perceived level of present ability and high level of desired ability

Interpretation: Participants perceive their own level of ability in this topic as very low, but the topic is very relevant to them. The gap is therefore very large, which can cause anxiety in some learners. The multiple-choice question results, however, indicate that their level of knowledge is higher than they think.

How can faculty help learners overcome their anxiety and understand that they know more than they think?

Start with an easy case in the lecture or small group discussion and guide learners through a stepwise approach, proceeding from simple to more complex information until they recognize that they know more than they thought.

Post-Event Evaluation Report

This report is shared after each educational event and serves to estimate the event's educational impact, how well the learning objectives were met, whether learning occurred (post-event gap analysis), how the faculty performed, and whether participants perceived any commercial bias. It is also an educational tool that promotes reflection by participants.

This information is used to evaluate the specific event and to guide modifications for future events. In addition, it enables the clinical divisions to share information with their councils and regional boards, to better understand the unique needs of the regions, and to enhance future learning activities.

The aggregated data is also used by curriculum developers to monitor the implementation and performance of new curricula, to identify trends, and to adapt existing curricula or develop new ones.

Individual Faculty Report

This report is optional; chairpersons who want it should ask the AO event owner for help requesting the reports for each event. During the educational event, the participants rate:

- How useful the presentation topic was to their daily practice (content evaluation)

- How effective the faculty members were in their roles (performance evaluation)

Every faculty member receives a confidential personalized report that includes:

- Impact of the overall event

- The degree to which event objectives have been met

- The individual's average performance rating compared with the overall faculty average

- Individual ratings (content and faculty performance) broken down by lecture, discussion group, and practical exercise

Commitment to Change Outcome Report

This report is optional; chairpersons who want it should ask the AO event owner for help requesting the reports for each event. Chairpersons receive this report 4 months after the event concludes. The Commitment to Change tool collects participants' intended changes in practice, the status of their implementation, and possible obstacles to implementing them. This is a practical approach to facilitating and measuring behavioral change and the transfer of learning resulting from attending an educational event. In addition, it encourages reflection, which is an essential aspect of learning.

For more information about the development of the evaluation and assessment system, read the article "Designing and Implementing a Harmonized Evaluation and Assessment System for Educational Events Worldwide."